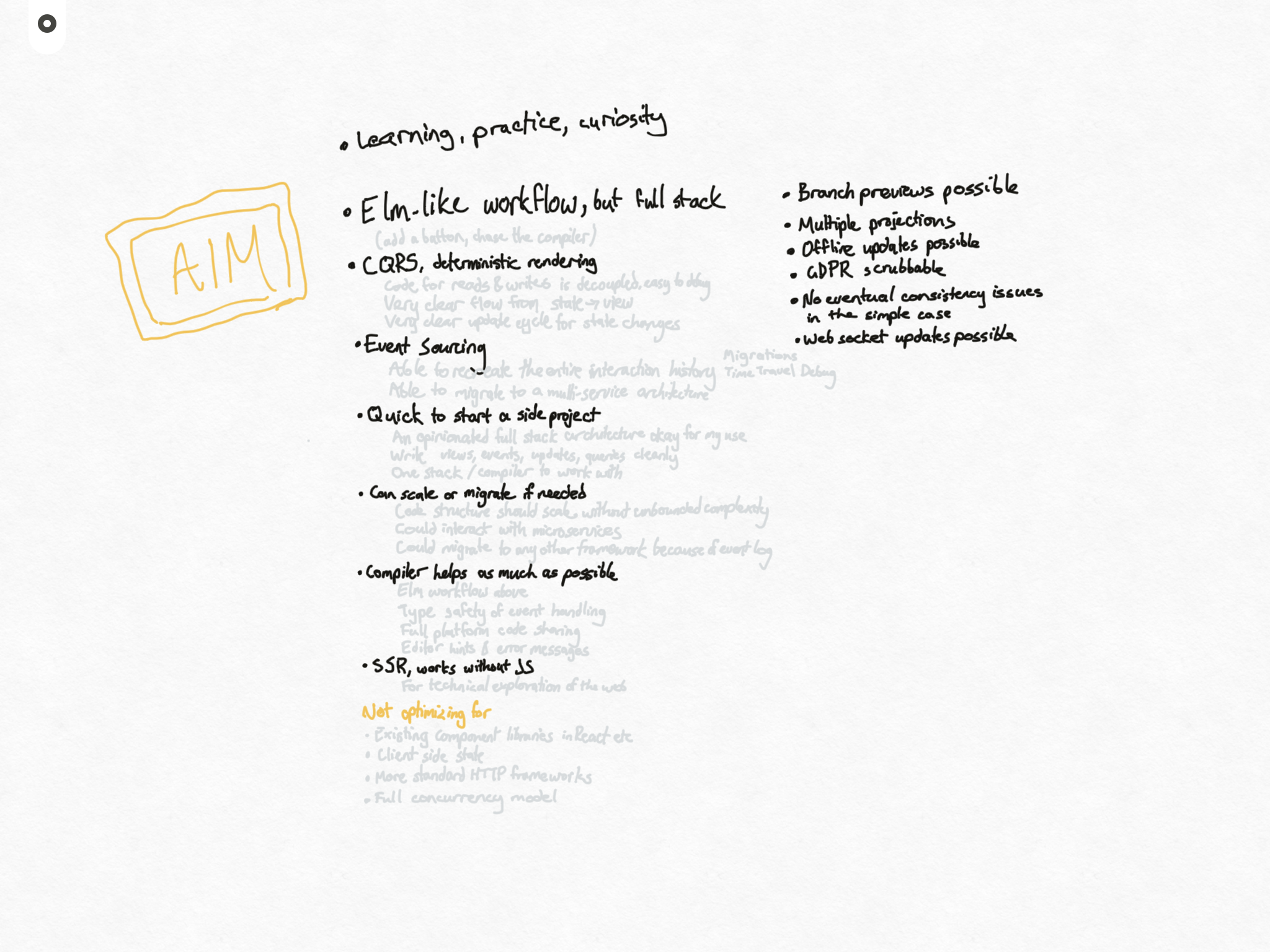

A look at recurring architectural patterns I see in both front end and back end that have the potential to tie together into a full stack framework.

In my last post I said part of my reason for wanting to write another web framework was that I’ve been exposed to similar ideas in both the front end and back end, and wanted to experiment with an architecture that ties it all together.

In this post, I’m going to explore those ideas: unidirectional data flow, the Elm architecture, CQRS and event sourcing. Then I’ll look at the common themes I see tying them together.

State, views, and one-directional data flow

Almost every popular front end architecture I’ve encountered recently shares an idea: you have data representing the current state of your page, and you use that state to render the view that the user can see and interact with.

If you want to change something on the page, you don’t update the page directly, you update the state, and that causes the view to update.

You might have heard this described in a few ways:

- Data Down, Actions Up: the data flows down from the state to the view. And the actions come up from the view to modify the state.

- Model, View, Controller: You have a data “model” layer that holds information about the current state, a “view” layer that knows how to display it, and a “controller” that does the communication in the middle.

- Unidirectional data flow: You often hear this term in the React ecosystem. Data flows from the top of your view down into the nested components. So a component only knows about the data passed into it, and nothing else. The data always flows downwards. How do you change the data then? As well as passing down the data from the state, we also pass down a function that can be used to update the state.
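To make that concrete, here is a minimal sketch in TypeScript. It is not taken from React, Ember or Elm; the names `State`, `Rendered`, `view` and `addTask` are all made up for illustration, and the "view" is plain text rather than real HTML so the example can run anywhere.

```typescript
// Hypothetical state for a to-do page.
interface State {
  title: string;
  tasks: string[];
}

// What the view produces: something to show, plus the one action it can send up.
interface Rendered {
  text: string;
  submitNewTask: (task: string) => void;
}

// Data down: the view only knows about the state passed into it.
// Actions up: it is also handed a function it can call to request a change.
function view(state: State, onAddTask: (task: string) => void): Rendered {
  const lines = [state.title].concat(state.tasks.map((task) => "- " + task));
  return { text: lines.join("\n"), submitNewTask: onAddTask };
}

// The state lives in one place, at the top.
let appState: State = { title: "My work", tasks: ["Plan week"] };
let rendered: Rendered = view(appState, addTask);

// The only way to change the page: build a new state, then render again.
function addTask(task: string): void {
  appState = { title: appState.title, tasks: appState.tasks.concat([task]) };
  rendered = view(appState, addTask);
}

// Simulate the user submitting the form.
rendered.submitNewTask("Check Slack");
console.log(rendered.text);
// My work
// - Plan week
// - Check Slack
```

Notice that `view` never touches `appState` directly. It can only read what it was given and call the function it was given.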

This pattern is used in frameworks as diverse as React and Ember and Elm. Why is it so common? Here are some of the advantages it provides:

- Each function in your code has one job: to turn the state into a view, or to update the state in response to an action. This makes it nice and easy to wrap your head around an individual piece of code.

- The functions don’t need to know about each other. If you have a “to-do list” app, and an action that adds a new to-do item – you don’t need to know the 3 different places it’ll show up in the UI and change them all – you just update the state. Likewise, if you want to add a new view, you don’t have to touch all the places that edit the “to-do” list, you can just look at the current state, however it came to be, and use that data to render your page.

- It becomes easy to debug. If there’s a bug, you can check if the state data is correct. If the state data looks correct, then the bug will be in one of your view functions. If that state data looks wrong, then the bug is probably in one of your action functions that change the state.

- It makes it easier to write tests. Tests for your action functions check simple things: if our state looks like this, and we perform this action, then the state should now look like that. That’s easy to write a unit test for. And for your view functions, you can write tests that use mocked state data to test all the different ways your view might be rendered. You can even create “stories” with Storybook, or take visual snapshots, for quick visual tests of many different ways the UI looks.

The Elm architecture

Elm takes this concept to the extreme. Because the language and the framework are tightly integrated, Elm can force you to follow the good advice from “data down, actions up”.

The Elm architecture has four parts:

- Model – the state of your application,

- View – a way to turn your state into HTML,

- Messages – a message triggered by the UI (like clicking a button) that has information about an action the user is requesting (like attempting to mark an item as complete)

- Update – a way to update your state based on “messages”

The Elm Architecture.

State. The page has some current state that is used to render the view. We use the type system to make sure the structure of this is consistent. In this example, it might have a title “My work” and a list of tasks like “Plan week” and “Check Slack”

View. Render HTML based on the current state. Following on from the example above, we might have a view function that receives our state, and calls functions to render a `<h1>` element and a `<ul>` with the list of tasks, and a form for adding new to-do items.

Html. We never manually edit HTML or the DOM, we just update state, which updates the view, and the framework checks what HTML needs changing. In the example above, this would be the rendered `h1`, `ul` and `form` HTML / DOM produced by the framework.

Update. The only type of events we trigger from the HTML view are strictly typed and exactly what our update function is expecting. When an action happens, the framework uses the update function to consider what the new state should be based on the previous state and the action that occurred. Following on from the example, an “AddTodo” action might append a new item to the list, while the “CompleteTask” action might remove an item from the list. The only way to update the state is to use the update function, one action at a time. This makes state management easy to unit test and debug!

There is also the opportunity to interact with the outside world – things like a Backend API. Elm provides a way to do this from the Update function (which can in turn trigger new actions) but it doesn’t have strong opinions on what happens in the Backend API.

And Elm will enforce this. You can only update the view of the page via your “view” function. Your “view” function only has access to the model to decide what to render. The only actions your view can trigger are the list of messages you define. And you can only update the state in the model using your update function to change things as messages come through.

And Elm has a fantastic type system and compiler to make this all work really nicely together. To show how it works, imagine you have a “to-do list” and you have a button to mark an item as complete:

- In your “view” function where you render the button, you can set an “onClick” event.

- The “onClick” event will trigger a message that something has happened. You decide the names of the possible messages that can happen, so we might choose a name like “MarkToDoAsComplete”.

- Because we have told Elm we have a message called “MarkToDoAsComplete”, it will force us to handle this message in our “update” function.

- In our “update” function we write the code that updates the model, setting the current to-do as complete.

- When a user views the page they see the button. When they click the button, the message is triggered, the update function is run, the model is updated with the to-do item now being marked as complete, and the view will update in response. By the time we did all the things the compiler asks, it all just worked.
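Here is roughly what those pieces look like, sketched in TypeScript rather than Elm so that all the examples in this post share one language. This is an approximation, not Elm's actual API: `Todo`, `Button`, the `kind` field and the "AddToDo" message are my own assumptions, and the view returns a list of button descriptions instead of real HTML.

```typescript
// Model: the state of the application.
interface Todo {
  id: number;
  text: string;
  complete: boolean;
}
interface Model {
  todos: Todo[];
}

// Messages: the complete list of things the view is allowed to ask for.
type Msg =
  | { kind: "AddToDo"; text: string }
  | { kind: "MarkToDoAsComplete"; id: number };

// Update: the only place a new model is produced, one message at a time.
function update(msg: Msg, model: Model): Model {
  switch (msg.kind) {
    case "AddToDo": {
      const todo: Todo = { id: model.todos.length + 1, text: msg.text, complete: false };
      return { todos: model.todos.concat([todo]) };
    }
    case "MarkToDoAsComplete": {
      const id = msg.id;
      return {
        todos: model.todos.map((t) =>
          t.id === id ? { id: t.id, text: t.text, complete: true } : t
        ),
      };
    }
  }
}

// View: reads the model and describes the buttons, naming the message each one sends.
interface Button {
  label: string;
  onClick: Msg;
}
function view(model: Model): Button[] {
  return model.todos
    .filter((t) => !t.complete)
    .map((t): Button => ({
      label: "Complete: " + t.text,
      onClick: { kind: "MarkToDoAsComplete", id: t.id },
    }));
}

// A stand-in for the framework's runtime: render, click, update, render again.
let model: Model = { todos: [] };
model = update({ kind: "AddToDo", text: "Plan week" }, model);
const buttons = view(model);
model = update(buttons[0].onClick, model); // simulate clicking the first button
```

After the simulated click, the only to-do is marked as complete and `view(model)` has no buttons left to render.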

The great thing about this is that Elm knows exactly what code is needed for all the pieces of your application to work. If you’re missing anything, it will give you a nice error message showing what to fix. This means that, in practice, you almost never get runtime errors in your Elm code.

But even nicer than that, it means you have a great workflow:

- You add your button, and a “MarkToDoAsComplete” message

- The compiler tells you that message needs to be added to your list of app messages. You do that.

- The compiler tells you that your “update” function needs to handle the message. You do that.

- It now all works.
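You can get a taste of this workflow outside of Elm. The sketch below uses TypeScript's `never` type to make the compiler complain when a message has no matching case. It is opt-in and weaker than what Elm gives you by default, and the names (`Msg`, `unhandled`, the list of booleans standing in for the model) are assumptions for illustration only.

```typescript
type Msg =
  | { kind: "AddToDo"; text: string }
  | { kind: "MarkToDoAsComplete"; index: number };

// The parameter type is `never`, so a call to this function only
// type-checks when no variant of Msg can reach it.
function unhandled(msg: never): never {
  throw new Error("Unhandled message: " + JSON.stringify(msg));
}

// The model here is just a list of "is this to-do complete?" flags.
function update(msg: Msg, done: boolean[]): boolean[] {
  switch (msg.kind) {
    case "AddToDo":
      return done.concat([false]);
    case "MarkToDoAsComplete": {
      const index = msg.index;
      return done.map((isDone, i) => (i === index ? true : isDone));
    }
    default:
      // Remove one of the cases above, or add a new variant to Msg,
      // and the compiler rejects this line until the message is handled.
      return unhandled(msg);
  }
}
```

The compile error points at the exact spot where the new message needs handling, which is the same "follow the errors until it works" loop described above.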

This “chase the compiler” workflow is what originally got me excited about the Elm language, not just the architecture – you can see Kevin Yank’s talk “Developer Happiness on the Front End with Elm” for a more detailed overview.

(As a bonus, if you do spot anything wrong, the strict framework for updating state based on actions, one at a time, allows powerful debugging tools like “time travel debugging”, where you can replay events one at a time to see their effect.)

Command Query Responsibility Segregation (CQRS)

On the back end, we sometimes find a similar pattern to “data down, actions up”. It’s called “Command Query Responsibility Segregation”. You separate the queries (data) and the commands (actions) into separate code paths, separate API endpoints, or even separate services.

If your back end uses an SQL database, you can think of the “queries” as the SELECT statements, and the “commands” as the INSERT, UPDATE or DELETE statements.

We use separate code paths for Commands (writes) and Queries (reads). Rather than having an API return new data after a command, we have the client repeat the full query.

And you end up with similar advantages:

- Each endpoint has one job.

- The endpoints don’t need to know about each other.

- It becomes easier to debug.

- The command endpoints and the query endpoints can adopt different scaling strategies. For example, caching can be applied to the “query” endpoints.
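As a rough sketch of that separation, here are a command handler and a query function that share nothing except the data store. Everything in it is hypothetical: the in-memory `taskTable` array stands in for a database, and `handleCommand` and `queryOpenTasks` stand in for what would be separate endpoints or services.

```typescript
interface Task {
  id: number;
  text: string;
  complete: boolean;
}

// Stand-in for the database table.
const taskTable: Task[] = [];

// Commands (writes): change the data and report only whether it worked.
type Command =
  | { kind: "CreateTask"; id: number; text: string }
  | { kind: "CompleteTask"; id: number };

function handleCommand(command: Command): { ok: boolean } {
  switch (command.kind) {
    case "CreateTask":
      taskTable.push({ id: command.id, text: command.text, complete: false });
      return { ok: true };
    case "CompleteTask": {
      const id = command.id;
      const matches = taskTable.filter((t) => t.id === id);
      matches.forEach((t) => {
        t.complete = true;
      });
      return { ok: matches.length > 0 };
    }
  }
}

// Queries (reads): return copies of the data and never change it.
function queryOpenTasks(): Task[] {
  return taskTable
    .filter((t) => !t.complete)
    .map((t) => ({ id: t.id, text: t.text, complete: t.complete }));
}

handleCommand({ kind: "CreateTask", id: 1, text: "Draw diagram" });
handleCommand({ kind: "CreateTask", id: 2, text: "Write post" });
handleCommand({ kind: "CompleteTask", id: 1 });

// The command did not return the new list, so the client asks again.
console.log(queryOpenTasks()); // only task 2, "Write post", is still open
```

Because `queryOpenTasks` has no side effects, it is the kind of function you could safely put a cache in front of.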

Event Sourcing

When we talked about the Elm architecture, we saw a front end framework with a strict way to update the current state: by processing one message at a time. Event sourcing brings a similar concept to our back end, and crucially, to our data and our “source of truth”.

It’s normal for the “source of truth” in a web application to be a database that represents the current state of all of your data. For a todo list, you might have a row for each todo item, and columns to set the text of the item, whether it is complete or not, and the order it appears in the list.

That table would be your source of truth.

Event Sourcing – changing the source of truth. Traditionally in a web application the “source of truth” might be a database table that represents the current state of the application. In event sourcing, the source of truth is an event log: all of the actions that occurred, one at a time. We can use this event log to build up a view of the current state.

Event sourcing is about changing the “source of truth” to be the events that occurred. Rather than caring exactly which todo items currently exist, and if they are complete, we care about when a user created a task, or marked a task as complete, or changed the order of the tasks in a list. These are the “events”, and they are our “source” of truth.

And we can process them, one event at a time, to build up a view of the data (in event sourcing these are often called “projections” of the data). Some projections might look very similar to what we had before – a database table with a row for each todo item, a column for the item text, whether it is complete, and the order in the list.

The power of event sourcing is that we can also create other views of the data. Perhaps we want to create a trend line graph showing how many open tasks we’ve had over time. If our source of truth was the current state, we wouldn’t be able to tell you how many tasks you had open last week (or this week last year!) With event sourcing, we can go back over all of the events, and build new views of the data.

Client actions. We can start with actions that are triggered by the user. Event sourcing as a pattern has no strong opinions on how this is handled.

Command handler. A service takes the action coming in from the client (like ticking off a task) and decides if it is allowed. If it is, it adds it to the event log.

Event log. A log of all the events that have occurred. Often this is in a database table. In the diagram above, we show 4 events: “Create Task”, with id “1” and task “Draw Diagram”. Then “Create Task” with id “2” and task “Write post”. Then “Complete Task” with id “1”. (Meaning “Draw Diagram” is complete). Then “Create Task” with id “3” and task “Clean dishes”.

Here the diagram splits into two, because rather than just having the task list, we can process the events and generate other interesting data. These “projections” are different data derived from the same events.

We have a “Your Tasks projection” that is a database table with “id”, “task” and “complete” columns. Based on the events, Task 1 “Draw diagram” is complete, while 2 “Write post” and 3 “Clean dishes” are not. This could be used to render a traditional to-do list UI.

And we have an “Open Tasks Graph projection” with two columns, “date” and “open tasks”. It shows how many tasks were open on any given date. We could use this to draw a trend line graph.
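The two projections can be sketched as two functions over the same event log. The four events below are the ones from the diagram, but the dates are invented for this example (the diagram doesn't give any), and the type and function names are my own. The sketch also assumes the log is already in date order and that only open tasks get completed.

```typescript
type TaskEvent =
  | { kind: "CreateTask"; date: string; id: number; task: string }
  | { kind: "CompleteTask"; date: string; id: number };

// The event log: our source of truth. Dates are made up for illustration.
const eventLog: TaskEvent[] = [
  { kind: "CreateTask", date: "2024-01-01", id: 1, task: "Draw diagram" },
  { kind: "CreateTask", date: "2024-01-01", id: 2, task: "Write post" },
  { kind: "CompleteTask", date: "2024-01-02", id: 1 },
  { kind: "CreateTask", date: "2024-01-03", id: 3, task: "Clean dishes" },
];

interface TaskRow {
  id: number;
  task: string;
  complete: boolean;
}
interface OpenTasksRow {
  date: string;
  openTasks: number;
}

// Projection 1: the current to-do list, one row per task.
function projectYourTasks(events: TaskEvent[]): TaskRow[] {
  const rows: TaskRow[] = [];
  events.forEach((e) => {
    if (e.kind === "CreateTask") {
      rows.push({ id: e.id, task: e.task, complete: false });
    } else {
      rows.forEach((row) => {
        if (row.id === e.id) row.complete = true;
      });
    }
  });
  return rows;
}

// Projection 2: how many tasks were open at the end of each date.
function projectOpenTasksGraph(events: TaskEvent[]): OpenTasksRow[] {
  const rows: OpenTasksRow[] = [];
  let openTasks = 0;
  events.forEach((e) => {
    openTasks += e.kind === "CreateTask" ? 1 : -1;
    const last = rows[rows.length - 1];
    if (last !== undefined && last.date === e.date) {
      last.openTasks = openTasks; // same day: keep only the latest count
    } else {
      rows.push({ date: e.date, openTasks: openTasks });
    }
  });
  return rows;
}

const yourTasks = projectYourTasks(eventLog);
const openTasksGraph = projectOpenTasksGraph(eventLog);
console.log(yourTasks); // task 1 complete, tasks 2 and 3 not
console.log(openTasksGraph); // 2 open, then 1, then 2
```

Neither projection is stored in the event log. Either one could be deleted and rebuilt from the events, and a third could be added later without changing anything that already exists.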

Similar to the front end with actions and state, this also opens up some powerful debugging options. We can replay the events and “time travel” to see exactly how our system responded, and look out for points where things may have gone astray.
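A simple way to picture this kind of "time travel" is a replay function that keeps every intermediate state instead of only the final one. This is a sketch under my own assumptions, not how any particular debugger works: `replay`, `applyEvent` and the simplified events (which identify tasks by title only) are hypothetical.

```typescript
// Fold the events into a state, remembering the state after each step.
// The result at position n is the state after the first n events.
function replay<S, E>(initial: S, events: E[], apply: (state: S, event: E) => S): S[] {
  const states: S[] = [initial];
  events.forEach((e) => {
    states.push(apply(states[states.length - 1], e));
  });
  return states;
}

// Simplified events for this example.
type TaskEvent =
  | { kind: "CreateTask"; task: string }
  | { kind: "CompleteTask"; task: string };

// The state we care about here: the titles of the tasks that are still open.
function applyEvent(openTasks: string[], e: TaskEvent): string[] {
  return e.kind === "CreateTask"
    ? openTasks.concat([e.task])
    : openTasks.filter((task) => task !== e.task);
}

const timeline = replay<string[], TaskEvent>(
  [],
  [
    { kind: "CreateTask", task: "Draw diagram" },
    { kind: "CreateTask", task: "Write post" },
    { kind: "CompleteTask", task: "Draw diagram" },
    { kind: "CreateTask", task: "Clean dishes" },
  ],
  applyEvent
);

// Step back to just after the third event.
console.log(timeline[3]); // only "Write post" is open at this point
```

If a bug report says the list looked wrong at some point, you can walk through `timeline` one step at a time and find the first event after which the state stops making sense.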

Bringing it together: the common concepts I want my “Small Universe” framework to draw on

You probably spotted some similar themes running through the above architectures:

- Keeping code to fetch data and code to process actions separate

- Having a way to get the “current state” for a page from our data

- Always rendering the pages based on that current state

- Allowing the views to trigger actions or “events”

- These events being tracked, and considered our source of truth

- Responding to one event at a time to update our application data

Following these allows us to write code which is simpler – each function is focused on either fetching data for the current view, displaying the current view, or processing an action. That makes code easier to understand, easier to test, and simpler to debug.

Making sure our data is updated one action at a time also opens up potential for time travel debugging, which is an incredibly powerful feature for you as a developer when you’re investigating how something went wrong. It also leaves the door open for new features that you can build, being able to take full advantage of all the past user actions.

Finally, by strictly defining the shape of your state, and the set of actions (or “events” or “messages”) that are possible, you can have the framework and compiler do a lot of work for you, ensuring that if a button exists, it has an action, and the action updates the state, and the view reflects the updated state.

So you can find a very productive workflow where you start adding one new line of code for your feature, and the compiler will guide you all the way to completing the feature as valid code, and you can be relatively confident it’ll work.

So, for this “Small Universe” framework I’m starting, I am taking inspiration from these architectures to try to build something that leads you to write code that is easy to write, understand, test and debug. Something that uses events as a source of truth to make it easy to build new features that process previous actions into features or views we hadn’t imagined up front. And something that leads to a happy and productive workflow, with the compiler able to provide ample assistance as you build out new features.

I’ll come back to describe the architecture I’m aiming for in a future post. But I hope this helps you understand the direction from which I’m approaching this project.

Have any questions? Things that weren’t clear? Ideas you want to share? I’d love to hear from you in the comments!