Complex Routing Schemes with haxe.web.Dispatch

I’ve looked at haxe.web.Dispatch before, and I thought it looked really simple – in both a good and a bad way. It was easy to set up and fast. But at first it looked like you couldn’t do complex URL schemes with it.

Well, I was wrong.

At the WWX conference I presented some of the work I’ve done on Ufront, a web application framework built on top of Haxe, and I mentioned that I wanted to write a new routing system for it. Until now, Ufront has had a pretty powerful routing system, but it is incredibly obese: dozens of classes, thousands of lines of code and a lot of runtime type checking, which is usually pretty slow.

Dispatch, on the other hand, does a lot of its type checking with macros at compile time, so it’s much faster. It also weighs in at less than 500 lines of code (including the macros!), so it’s much easier to comprehend and maintain. Wrapping my head around the Ufront framework took me most of a day of following the code down the rabbit hole, but I was able to understand the entire Dispatch class in less than an hour. So that is good!

But the question remains: is it versatile enough to handle complex URL routing schemes? After spending more time with it and its documentation, I found that it is a lot more flexible than I originally thought, and it should work for the vast majority of use cases.

Learning by example

Take a look at the documentation to get an idea of general usage. There’s some stuff in there that I won’t cover here, so it’s well worth a read. Once you’ve got that down, here is how your API / routing class might be structured for various URL schemes:

If we want a default homepage:

function doDefault() trace ( "Welcome!" );

If we want a single page at /about/:

function doAbout() trace ( "About us" );

If you would like an alias route, that is, a different URL (/aboutus/) that does the same thing:

inline function doAboutus() doAbout();

If we want a page with an argument in the route /help/{topic}/:

function doHelp( topic:String ) {
    trace ( 'Info about $topic' );
}

It’s worth noting that if topic is not supplied, an empty string is passed in, so you can check for this:

function doHelp( topic:String ) {
    if ( topic == "" ) {
        trace ( 'Please select a help topic' );
    }
    else {
        trace ( 'Info about $topic' );
    }
}
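None of the snippets above show how a URL actually reaches these methods. Here is a minimal sketch of the entry point. It assumes Haxe 3.x, where haxe.web.Dispatch is part of the standard library, and a hard-coded path standing in for a real request. The Main class and the example path are made up for illustration. Dispatch.run is a macro, so the routing rules are generated from the class's doX methods at compile time:

```haxe
import haxe.web.Dispatch;

class Routes {
    public function new() {}

    // "/" (the homepage)
    function doDefault() trace("Welcome!");

    // "/about/"
    function doAbout() trace("About us");

    // "/help/{topic}/": topic is "" when no topic is supplied
    function doHelp(topic:String) {
        if (topic == "") trace("Please select a help topic");
        else trace('Info about $topic');
    }
}

class Main {
    static function main() {
        // Hypothetical path; a real app would take it from the incoming request
        var path = "/help/dispatch/";
        // Request parameters; empty here because none of these routes read any
        var params = new haxe.ds.StringMap<String>();
        // Macro call: Routes' doX methods are turned into routing rules at compile time
        Dispatch.run(path, params, new Routes());
    }
}
```

On a server you would pass the real request path and parameters instead. On the neko target, for example, that means neko.Web.getURI() and neko.Web.getParams().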

Now, most projects get big enough that you might want more than one API class, and more than one level of routes. Let’s say we’re working on the haxelib website (a task I might take on soon). There are several pages to do with projects (or haxelibs), so we might put them all together in a ProjectController and make those routes available under /projects/{something}/.

If we want a sub-controller, we use it like so:

/*
    /projects/               => projectController.doDefault("")
    /projects/{name}/        => projectController.doDefault(name)
    /projects/popular/       => projectController.doPopular()
    /projects/bytag/{tag}/   => projectController.doBytag(tag)
*/

function doProjects( d:Dispatch ) {
    d.dispatch( new ProjectController() );
}

As you can see, that gives a fairly easy way to organise both your code and your URLs: all the code goes in ProjectController and we access it from /projects/. Simple.
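The post doesn't show ProjectController itself. Here is a sketch of what it might look like for the /projects/ routes listed in the comment above, again assuming Haxe 3.x. The trace messages are placeholders. The sketch also assumes that Dispatch matches a URL segment to a method by dropping "do" and changing only the first letter's case. Under that rule, the method serving /bytag/ has to be spelled doBytag, because a doByTag would answer /byTag/ instead.

```haxe
// Hypothetical controller for the /projects/... routes; messages are placeholders.
class ProjectController {
    public function new() {}

    // "/projects/" gives name == "", "/projects/{name}/" gives the project name
    function doDefault(name:String) {
        if (name == "") trace("All projects");
        else trace('Project: $name');
    }

    // "/projects/popular/"
    function doPopular() trace("Popular projects");

    // "/projects/bytag/{tag}/": spelled to match the lowercase URL segment
    function doBytag(tag:String) trace('Projects tagged $tag');
}
```

Once doProjects has consumed the "projects" segment, the rest of the path goes to this controller. So /projects/bytag/macro/ should end up in doBytag("macro").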

In that example, however, all the variable capturing happens at the end of the URL. Sometimes your routing scheme would make more sense if you could capture a variable in the middle. Perhaps you would like:

/users/{username}/

/users/{username}/contact/

/users/{username}/{project}/

Even this can still be done with Dispatch (I told you it was flexible):

function doUsers( d:Dispatch, username:String ) {
    if ( username == "" ) {
        trace( "List of users" );
    }
    else {
        d.dispatch( new UserController(username) );
    }
}

And then in your UserController class:

class UserController {
    var username:String;

    public function new( username:String ) {
        this.username = username;
    }

    function doDefault( project:String ) {
        if ( project == "" ) {
            trace( '$username\'s projects' );
        }
        else {
            trace( '$username\'s $project project' );
        }
    }

    function doContact() {
        trace( 'Contact $username' );
    }
}

So the username is captured in doUsers(), and then is passed on to the UserController, where it is available for all the possible controller actions. Nice!

As you can see, this better represents the hierarchy of your site: both your URL and your code are well structured.

Sometimes, it’s nice to give users a top-level directory. GitHub does this:

http://github.com/jasononeil/

We can too. The trick is to put it in your doDefault action:

function doDefault( d:Dispatch, username:String ) {
    if ( username == "" ) {
        trace( "Welcome" );
    }
    else {
        d.dispatch( new UserController(username) );
    }
}

Now we can do things like:

/                => doDefault()
/jason/          => UserController("jason").doDefault("")
/jason/detox     => UserController("jason").doDefault("detox")
/jason/contact   => UserController("jason").doContact()
/projects/       => ProjectController.doDefault("")
/about/          => doAbout()

You can see here that Dispatch is clever enough to tell a special route, like “about” or “projects/something”, from a username that should be passed on to the UserController: doDefault is only called when the first part of the URL doesn’t match any other doX method.
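To check the table above end to end, here is a minimal self-contained version of the pieces so far, again assuming Haxe 3.x. The controllers are trimmed-down stand-ins: ProjectController only has doDefault, and trace replaces real output. Main simply runs each path from the table through Dispatch.run:

```haxe
import haxe.web.Dispatch;

class UserController {
    var username:String;

    public function new(username:String) {
        this.username = username;
    }

    // "/{username}/" and "/{username}/{project}"
    function doDefault(project:String) {
        if (project == "") trace('$username\'s projects');
        else trace('$username\'s $project project');
    }

    // "/{username}/contact"
    function doContact() trace('Contact $username');
}

// Trimmed-down stand-in for a real ProjectController
class ProjectController {
    public function new() {}

    function doDefault(name:String) trace(name == "" ? "List of projects" : 'Project $name');
}

class Routes {
    public function new() {}

    // Only reached when the first URL segment matches no other doX method,
    // so that segment is treated as a username
    function doDefault(d:Dispatch, username:String) {
        if (username == "") trace("Welcome");
        else d.dispatch(new UserController(username));
    }

    function doAbout() trace("About us");

    function doProjects(d:Dispatch) d.dispatch(new ProjectController());
}

class Main {
    static function main() {
        var paths = ["/", "/jason/", "/jason/detox", "/jason/contact", "/projects/", "/about/"];
        for (path in paths)
            Dispatch.run(path, new haxe.ds.StringMap<String>(), new Routes());
    }
}
```

Because doDefault is only a fallback, adding a new doSomething method to Routes later takes that name away from users. For example, a user called “about” could never reach their page. It’s worth reserving route names early.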

Finally, you might want to keep your code in a separate controller but still have its routes live in the top-level routing namespace.

To do this, add methods to your Routes class that forward to the sub-controller:

inline function doSignup() (new AuthController()).doSignup();
inline function doSignin() (new AuthController()).doSignin();
inline function doSignout() (new AuthController()).doSignout();

This will let the route be defined at the top level, so you can use /signup/ rather than /auth/signup/. But you can still keep your code clean and separate. Winning. The inline will also help minimise any performance penalty for doing things this way.
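Here is a minimal sketch of the AuthController side, again assuming Haxe 3.x, with placeholder messages. One thing the forwarding needs that is easy to miss: Routes calls these methods directly, so they must be public on AuthController. Dispatch itself can reach the private doX methods on Routes because it calls them via reflection. The names are spelled doSignup rather than doSignUp, on the assumption that Dispatch only changes the case of the first letter after “do”, so /signup/ needs doSignup.

```haxe
import haxe.web.Dispatch;

// Hypothetical auth controller; its methods are public so Routes can forward to them.
class AuthController {
    public function new() {}
    public function doSignup() trace("Sign-up form");
    public function doSignin() trace("Sign-in form");
    public function doSignout() trace("Signed out");
}

class Routes {
    public function new() {}

    function doDefault() trace("Welcome!");

    // Top-level /signup/, /signin/ and /signout/ routes, implemented in AuthController
    inline function doSignup() (new AuthController()).doSignup();
    inline function doSignin() (new AuthController()).doSignin();
    inline function doSignout() (new AuthController()).doSignout();
}

class Main {
    static function main() {
        Dispatch.run("/signup/", new haxe.ds.StringMap<String>(), new Routes());
    }
}
```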

Comparison to Ufront/MVC style routing

Dispatch is great. It’s lightweight, so it’s really fast, and because it’s easier to understand, the code base will be easier to extend or modify. And it’s flexible enough to cover all the common use cases I could think of.

I’m sure if I twisted my logic far enough, I could come up with a situation that doesn’t fit, but for me this is a pretty good start.

There are three things the old Ufront routing could do that this can’t:

- Filtering based on whether the request is a POST, GET, etc. It was also possible to do some other filtering, such as checking authentication. I think all of this can be better achieved with macros than with the runtime solution in the existing Ufront routing framework.

- LocalizedRoutes, but I’m sure with some more macro love we could make even that work. Besides, I’m not sure that localized routes ever functioned properly in Ufront anyway ;)

- Being able to capture “controller” and “action” as variables, so that you can set up an automatic /{controller}/{action}/{...} routing system. While this makes for a deceptively simple setup, it is in fact quite complex behind the scenes, and not very type-safe. I think the “Haxe way” is to make sure the compiler knows what’s going on, so any potential errors are caught at compile time, not left for a user to discover.

Future

I’ll definitely begin using this for my projects, and if I can convince Franco, I will make it the default for new Ufront projects. The old framework can be left in there in case people still want it, and so that we don’t break legacy code.

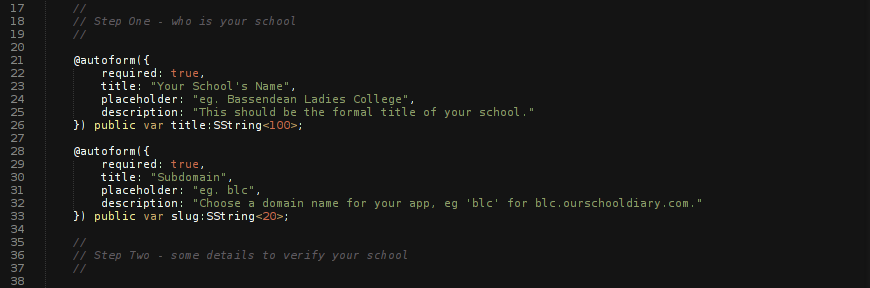

I’d like to implement a few macros to make things easier too.

For example,

var doUsers:UserController;

would automatically become:

function doUsers( ?d:Dispatch, username:String ) {
    d.dispatch( new UserController(username) );
}

The arguments for “doUsers()” would match the arguments of the “UserController” constructor. If “username” were blank, it would still be passed to the UserController, whose doDefault could test for a blank username and take the appropriate action.

I’d also like:

@:forward(doSignup, doSignin, doSignout) var doAuth:AuthController;

To become:

function doAuth( ?d:Dispatch ) {
    d.dispatch( new AuthController() );
}

inline function doSignup() (new AuthController()).doSignup();
inline function doSignin() (new AuthController()).doSignin();
inline function doSignout() (new AuthController()).doSignout();

Finally, it would be nice to be able to run different actions depending on the HTTP method. So something like:

@:method(GET) function doLogin() trace ("Please log in");

@:method(POST) function doLogin( args:{ user:String, pass:String } ) {
    if ( attemptLogin(args.user, args.pass) ) {
        trace ( 'Hello ${args.user}' );
    }
    else {
        trace ( 'Wrong password' );
    }
}

function doLogin() {
    trace ( "Why are you using a weird HTTP method?" );
}

This would compile to something like:

function get_doLogin() {
    trace ("Please log in");
}

function post_doLogin( args:{ user:String, pass:String } ) {
    if ( attemptLogin(args.user, args.pass) ) {
        trace ( 'Hello ${args.user}' );
    }
    else {
        trace ( 'Wrong password' );
    }
}

function doLogin() {
    trace ( "Why are you using a weird HTTP method?" );
}

Then, if you make a GET request, it would first try “get_doLogin”. If that doesn’t exist, it would fall back to “doLogin”.
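This method-based fallback doesn’t exist in Dispatch; it’s only a proposal. The lookup order itself is easy to sketch at runtime with Reflect, though. In this sketch, MethodFallback.call and LoginRoutes are hypothetical names, and the HTTP method is passed in as a plain string. The “get_” prefix follows the naming proposed above. A macro version would resolve this at compile time instead:

```haxe
// Hypothetical runtime sketch of the proposed lookup order; not part of haxe.web.Dispatch.
class MethodFallback {
    // Tries "<method>_<action>" first (e.g. "get_doLogin"), then plain "<action>".
    // Returns false when neither method exists on obj.
    public static function call(obj:Dynamic, httpMethod:String, action:String, args:Array<Dynamic>):Bool {
        for (name in [httpMethod.toLowerCase() + "_" + action, action]) {
            var f = Reflect.field(obj, name);
            if (f != null && Reflect.isFunction(f)) {
                Reflect.callMethod(obj, f, args);
                return true;
            }
        }
        return false;
    }
}

// Hypothetical routes class using the proposed naming convention.
class LoginRoutes {
    public var log:Array<String>;

    public function new() {
        log = [];
    }

    // Kept explicitly because it is only ever called through reflection
    @:keep function get_doLogin() {
        log.push("Please log in");
    }

    @:keep function doLogin() {
        log.push("Why are you using a weird HTTP method?");
    }
}
```

With this, MethodFallback.call(routes, "GET", "doLogin", []) runs get_doLogin, and any other method falls through to doLogin.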

The aim then, would be to write code as simple as:

class Routes {
    function doDefault() trace ("Welcome!");
    var doUsers:UserController;
    var doProjects:ProjectController;
    @:forward(doSignup, doSignin, doSignout) var doAuth:AuthController;
}

So that you have a very simple, readable structure that shows how the routing flows through your apps.

When I get these macros done, I will include them in Ufront for sure.

Example Code

I have a project which works with all of these examples, in case you have trouble seeing how it fits together.

It is on this gist.

In it, I don’t use real HTTP requests; I just loop through a bunch of test paths to check that everything is working properly. Which it is… Hooray for Haxe!
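The gist isn’t reproduced here, but a test loop of that kind might look roughly like this sketch. It assumes Haxe 3.x, and the routes and paths are made up. It also shows what happens to paths that don’t route: Dispatch throws a DispatchError value. You get DENotFound when the first segment matches no doX method and there is no doDefault to fall back on, and DETooManyValues when segments are left over after the arguments have been filled:

```haxe
import haxe.web.Dispatch;

// Made-up routes for the test loop; there is deliberately no doDefault.
class Routes {
    public function new() {}

    function doAbout() trace("About us");

    function doHelp(topic:String) trace(topic == "" ? "Please select a help topic" : 'Info about $topic');
}

class Main {
    // Runs one path and reports "ok" or the name of the DispatchError constructor.
    public static function check(path:String):String {
        try {
            Dispatch.run(path, new haxe.ds.StringMap<String>(), new Routes());
            return "ok";
        } catch (e:DispatchError) {
            return Type.enumConstructor(e);
        }
    }

    static function main() {
        for (path in ["/about/", "/help/haxe/", "/nope/", "/about/extra/"])
            trace('$path -> ${check(path)}');
    }
}
```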