I want to start capturing my notes from important articles I’m reading about the impact of AI.

AI Doesn’t Reduce Work—It Intensifies It

by Aruna Ranganathan and Xingqi Maggie Ye

While this may sound like a dream come true for leaders, the changes brought about by enthusiastic AI adoption can be unsustainable, causing problems down the line. Once the excitement of experimenting fades, workers can find that their workload has quietly grown and feel stretched from juggling everything that’s suddenly on their plate. That workload creep can in turn lead to cognitive fatigue, burnout, and weakened decision-making.

They name three ways AI intensifies work:

- “Task expansion” – people now attempt things that previously sat outside the scope of their role, e.g. engineers drafting communications, or designers writing code. This leads to more work for yourself, but also more work for those who have to assist with or review the out-of-scope thing you’re doing.

- “Engineers increasingly found themselves coaching colleagues who were ‘vibe-coding’ and finishing partially complete pull requests. This oversight often surfaced informally—in Slack threads or quick desk-side consultations—adding to engineers’ workloads.”

- “Blurred boundaries between work and non-work” – “Many prompted AI during lunch, in meetings, or while waiting for a file to load. Some described sending a ‘quick last prompt’ right before leaving their desk so that the AI could work while they stepped away.” Being able to direct an agent from your phone blurs the lines significantly. People can 100% now work during their toilet breaks 😂😭

- “More multitasking” – you’re more tempted to start several tasks and switch between them, giving you a sense of powering through your backlog. But the extreme context switching has a cost.

- “While this sense of having a ‘partner’ enabled a feeling of momentum, the reality was a continual switching of attention, frequent checking of AI outputs, and a growing number of open tasks. This created cognitive load and a sense of always juggling, even as the work felt productive.”

- The words “felt productive” remind me of this study. I suspect there is more of an AI speedup now, but the mind-blowing thing here was that engineers predicted they’d be more productive, and reported that they were more productive, even when the observed results showed they were less productive. Our “feeling” of productivity isn’t a reliable measure.

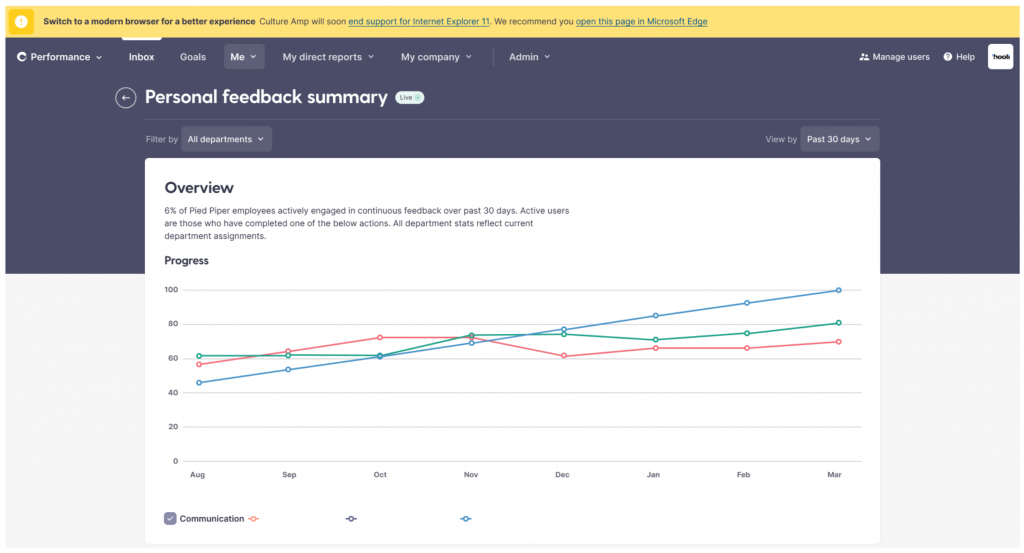

For Culture Amp especially, we care about these kinds of problems. A lot of our research is into how to have both a “high performance” and a “high engagement” culture (with wellbeing and sustainability being a key part of the engagement measure). If you get both right, it’s incredible for the company. If AI helps you be high performing, but not in a sustainable way, then you become strained, and it’s not a good long-term win for the company.

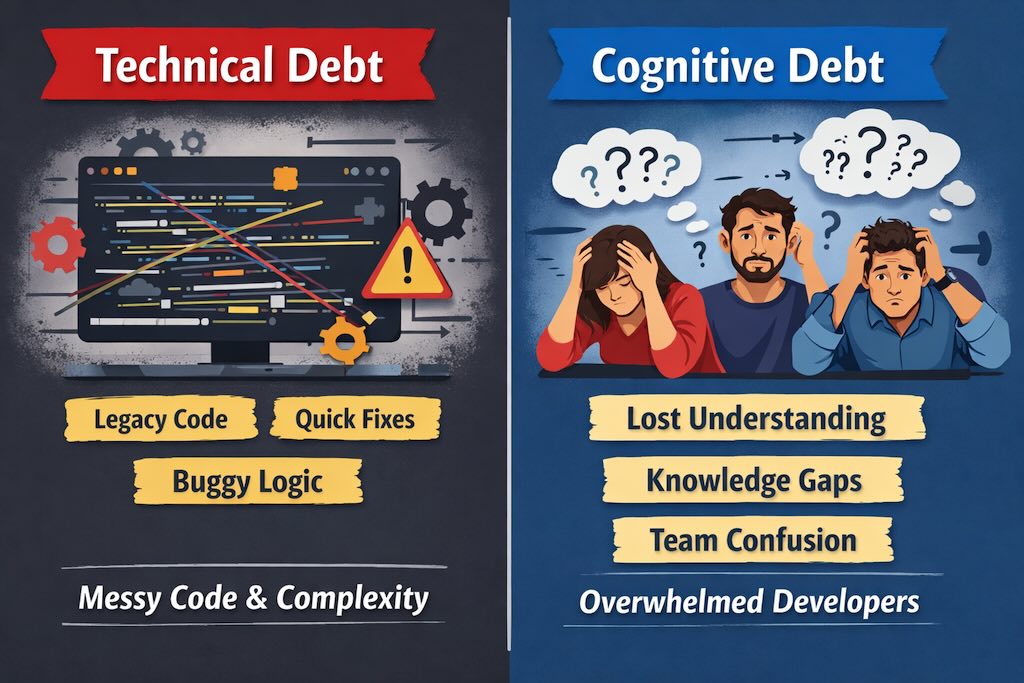

How Generative and Agentic AI Shift Concern from Technical Debt to Cognitive Debt

By Margaret-Anne Storey

Even if AI agents produce code that could be easy to understand, the humans involved may have simply lost the plot and may not understand what the program is supposed to do, how their intentions were implemented, or how to possibly change it.

She gives a simple example from a student team losing understanding of what they’ve built:

But by weeks 7 or 8, one team hit a wall. They could no longer make even simple changes without breaking something unexpected. When I met with them, the team initially blamed technical debt: messy code, poor architecture, hurried implementations. But as we dug deeper, the real problem emerged: no one on the team could explain why certain design decisions had been made or how different parts of the system were supposed to work together. The code might have been messy, but the bigger issue was that the theory of the system, their shared understanding, had fragmented or disappeared entirely. They had accumulated cognitive debt faster than technical debt, and it paralyzed them.

Our team has talked about how architectural decisions made when vibe coding are usually not good long-term decisions. We talked about a playbook that looks like this:

- Vibe code a v1 to learn as much as we can (about the problem, technical approach, UX issues etc)

- Don’t waste time polishing the v1 and making the code nice; we’ll throw it out

- For v2, plan your architecture and abstractions really carefully based on what you learned in the vibe-coded v1.

- Write out key types and function signatures yourself

- Document edge cases, business logic decisions, things we want tests for.

- Get review from another engineer on this plan

- Then get an agent to help build out v2

- And this time do the work to clean the code up and make it nice. But it’ll be building within the abstractions you planned.
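
As a rough sketch of what “write out key types and function signatures yourself” and “document edge cases” could look like before handing v2 to an agent: the domain here (`Note`, `NoteStore`, `summarise_tags`) is entirely hypothetical and only stands in for whatever the real project needs. The body of `summarise_tags` is included only so the sketch runs; in the playbook above, that is the part the agent would fill in.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Note:
    """A single captured note. Hypothetical domain type, for illustration only."""

    article_title: str
    body: str
    tags: tuple[str, ...] = ()


class NoteStore(Protocol):
    """Hand-written abstraction the agent is expected to build within.

    Storage details (file, database, API) are deliberately left open.
    """

    def save(self, note: Note) -> None: ...

    def find_by_tag(self, tag: str) -> list[Note]: ...


def summarise_tags(notes: list[Note]) -> dict[str, int]:
    """Count how many notes carry each tag.

    Edge cases decided up front by a human:
    - tags are compared case-insensitively (keys are lowercased)
    - a tag repeated on one note counts once for that note
    - an empty list returns an empty dict
    """
    counts: dict[str, int] = {}
    for note in notes:
        # De-duplicate per note after lowercasing, so "AI" and "ai" count once.
        for tag in {t.lower() for t in note.tags}:
            counts[tag] = counts.get(tag, 0) + 1
    return counts
```

The docstring doubles as the list of things we want tests for, which also gives the reviewing engineer something concrete to push back on.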

One other idea that comes to mind is keeping a documented understanding of the project in the README (or elsewhere in the repo) that includes:

- What it is and what it does

- The problems and constraints it solves for

- The architecture and key abstractions chosen

- The approach to automated testing, and what manual testing is required

You could then make your agent coding process include reading this context at the start of a session, and writing it up at the end of a session.
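
One way to keep that habit honest is a small deterministic check that the README still contains each of those sections. This is a minimal sketch, assuming the README is markdown and that the section headings are named as in `REQUIRED_SECTIONS`; both the heading names and the function name are my own placeholders, not something from the article.

```python
import re

# Hypothetical heading names mirroring the four bullets above.
REQUIRED_SECTIONS = (
    "What it is",
    "Problems and constraints",
    "Architecture and key abstractions",
    "Testing approach",
)


def missing_sections(readme_text: str) -> list[str]:
    """Return the required section titles that have no matching markdown heading."""
    headings = {
        match.group(1).strip().lower()
        for match in re.finditer(
            r"^#{1,6}[ \t]+(.+?)[ \t]*$", readme_text, flags=re.MULTILINE
        )
    }
    # Preserve the order of REQUIRED_SECTIONS so the report is stable.
    return [title for title in REQUIRED_SECTIONS if title.lower() not in headings]
```

It could run at the start of an agent session or in CI, failing loudly when a section has been dropped. It only checks that the headings exist, not that the content under them is still true, so it complements the end-of-session write-up rather than replacing it.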

Anyway – these are ideas for solutions, but I think this article is interesting for naming the problem: Cognitive Debt, when it doesn’t even matter if the source code is in good shape or not because there’s no understanding of what the thing is, or how it’s supposed to work.

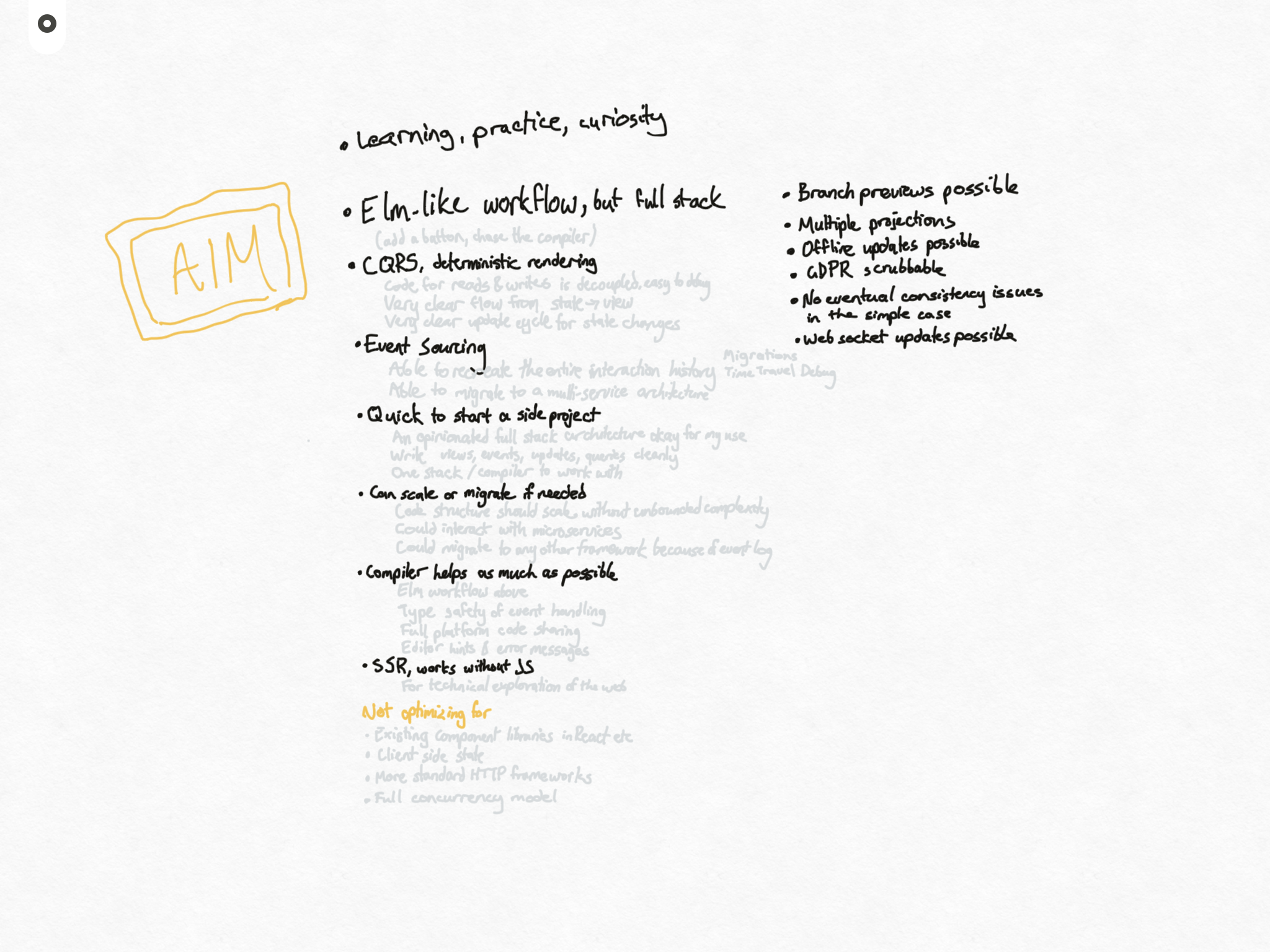

Harness Engineering

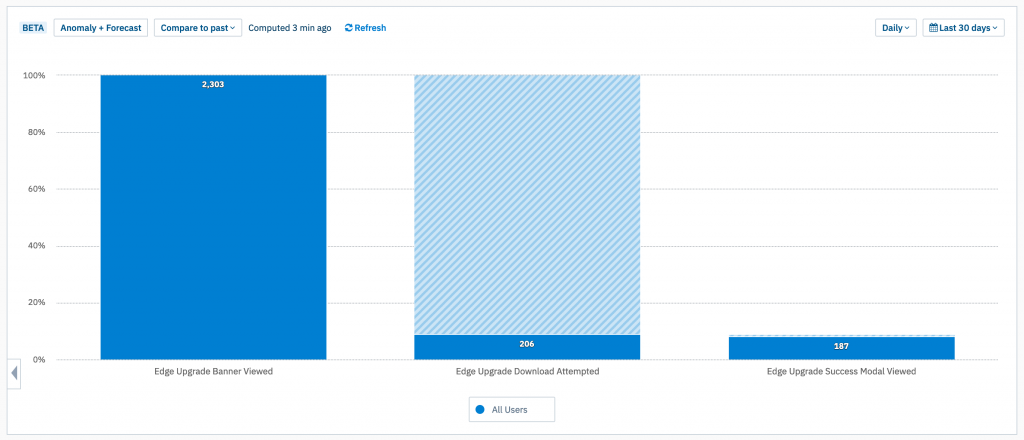

It was very interesting to read OpenAI’s recent write-up on “Harness engineering” which describes how a team used “no manually typed code at all” as a forcing function to build a harness for maintaining a large application with AI agents. After 5 months, they’ve built a real product that’s now over 1 million lines of code.

I hear about more people doing this, and I think it’s going to require a fair bit of supporting structure (a “harness”, as they’re calling it) to make sure the agent builds in a sustainable way and stays useful even as the codebase grows.

The categories she pulls from their post are interesting:

The OpenAI team’s harness components mix deterministic and LLM-based approaches across 3 categories (grouping based on my interpretation):

- Context engineering: Continuously enhanced knowledge base in the codebase, plus agent access to dynamic context like observability data and browser navigation

- Architectural constraints: Monitored not only by the LLM-based agents, but also deterministic custom linters and structural tests

- “Garbage collection”: Agents that run periodically to find inconsistencies in documentation or violations of architectural constraints, fighting entropy and decay

I’ve put most of my AI thinking into context engineering so far, and have started pondering which architectures are going to be more successful. For example, offering abstractions that help keep code modularised so agents can navigate it effectively within their context windows, or providing better feedback loops so they can iterate towards a solution rather than write code and hope. The third one, “garbage collection”, I’ve hardly started to consider.
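
To make the “deterministic custom linters and structural tests” idea concrete for myself, here is a minimal sketch of a structural test that enforces a layering rule by parsing imports. The layer names and the `ALLOWED_IMPORTS` table are hypothetical, and this is not how the OpenAI team implemented theirs; it is just one simple way a check like this could work.

```python
import ast

# Hypothetical layering: each layer may import only from the layers listed for it.
ALLOWED_IMPORTS = {
    "domain": set(),
    "services": {"domain"},
    "web": {"services", "domain"},
}


def imported_packages(source: str) -> set[str]:
    """Return the top-level package names imported by the given source text."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are skipped.
            names.add(node.module.split(".")[0])
    return names


def layer_violations(layer: str, source: str) -> list[str]:
    """List the known layers that `source` imports but `layer` may not depend on."""
    allowed = ALLOWED_IMPORTS[layer] | {layer}
    return sorted(
        name
        for name in imported_packages(source)
        if name in ALLOWED_IMPORTS and name not in allowed
    )
```

Because the check is deterministic, an agent gets the same answer every time it runs, which is the kind of feedback loop that lets it iterate towards a solution rather than write code and hope.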